Machines That Build Themselves

Using Claude Code for development lately has been one of the most motivating, productivity-boosting experiences I've had in years. The speed, the feedback loops, the way it suggests next steps. It gives you this constant feeling that anything is achievable.

And at some point I caught myself thinking: I don't just want this for coding. I want this for everything that I do.

It Started With Memory

It started with my language learning app, Babblo. To make the AI personas feel real, they had to remember previous conversations. As soon as the AI forgets, the illusion breaks. So I built a rudimentary memory system to keep continuity alive.

Around the same time, I was experimenting with Claude Code to configure automations in n8n, an open-source alternative to Zapier. I was using Claude Code to generate the automation logic and then checking the configuration by hand. Then it hit me:

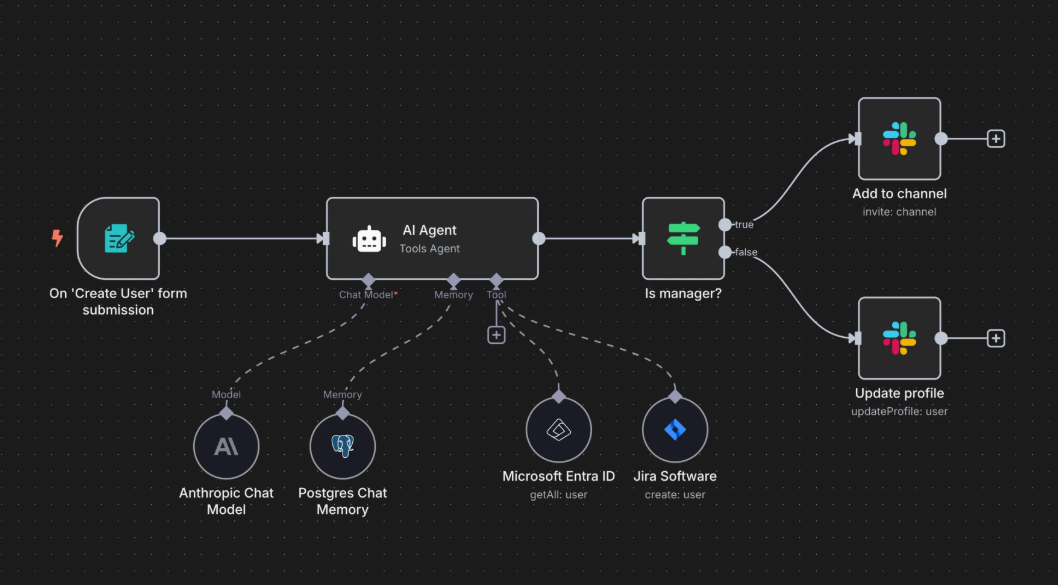

Automation with n8n

Sure, n8n makes it easier for humans to build complex automations. But that makes no difference to Claude Code. It could just as well build the automation as a black box in Python, Rust, n8n or anything else. I only care about the outcome.

Once I realised I could push any kind of information into persistent memory (notes, actions, context) and invoke real-world actions, I stopped thinking of it as "memory" and started thinking of it as a personal assistant.

From Notes to an Assistant (By Accident)

I built a simple system for notes and actions, backed by MCP (Model Context Protocol). Claude Code became the natural-language interface. Originally, I designed it so that when I added an action, Claude Code would ask me to categorise it and rate its urgency and importance.

But once it was live, Claude Code just started guessing those values itself. And it was almost always right. That was my first real "oh" moment. I had set my expectations far too low.
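To make the shape of such a system a little more concrete, here is a minimal sketch of the kind of action store that could sit behind the MCP tools. It is not the code from the repository: the names `ActionStore`, `add_action`, `mark_done` and `Category`, the category values and the 1 to 5 scales are all hypothetical, and an in-memory list stands in for persistent storage. The relevant detail is that category, urgency and importance are ordinary arguments, so the model calling the tool can fill them in itself instead of asking.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum


class Category(str, Enum):
    # Hypothetical categories; the post does not say which ones the real system uses.
    WORK = "work"
    PERSONAL = "personal"
    ADMIN = "admin"


@dataclass
class Action:
    title: str
    category: Category
    urgency: int      # 1 (low) to 5 (high), an assumed scale
    importance: int   # 1 (low) to 5 (high), an assumed scale
    done: bool = False
    created: date = field(default_factory=date.today)


class ActionStore:
    """In-memory stand-in for the persistent store behind the (hypothetical) MCP tools."""

    def __init__(self) -> None:
        self._actions: list[Action] = []

    def add_action(self, title: str, category: str, urgency: int, importance: int) -> Action:
        # The caller (here, the model) supplies category, urgency and importance.
        for name, value in (("urgency", urgency), ("importance", importance)):
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")
        action = Action(title, Category(category), urgency, importance)
        self._actions.append(action)
        return action

    def mark_done(self, title: str) -> bool:
        # Marks the first open action with this title as done; False if none matched.
        for action in self._actions:
            if action.title == title and not action.done:
                action.done = True
                return True
        return False

    def open_actions(self) -> list[Action]:
        return [a for a in self._actions if not a.done]
```

In a real MCP server, each of these methods would be registered as a tool that Claude Code can call. The range check in `add_action` matters more once the model, not the user, is choosing the values.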

Then things escalated. Missing feature? "Want me to add that?" Done in minutes. Bug found? Fixed on the fly.

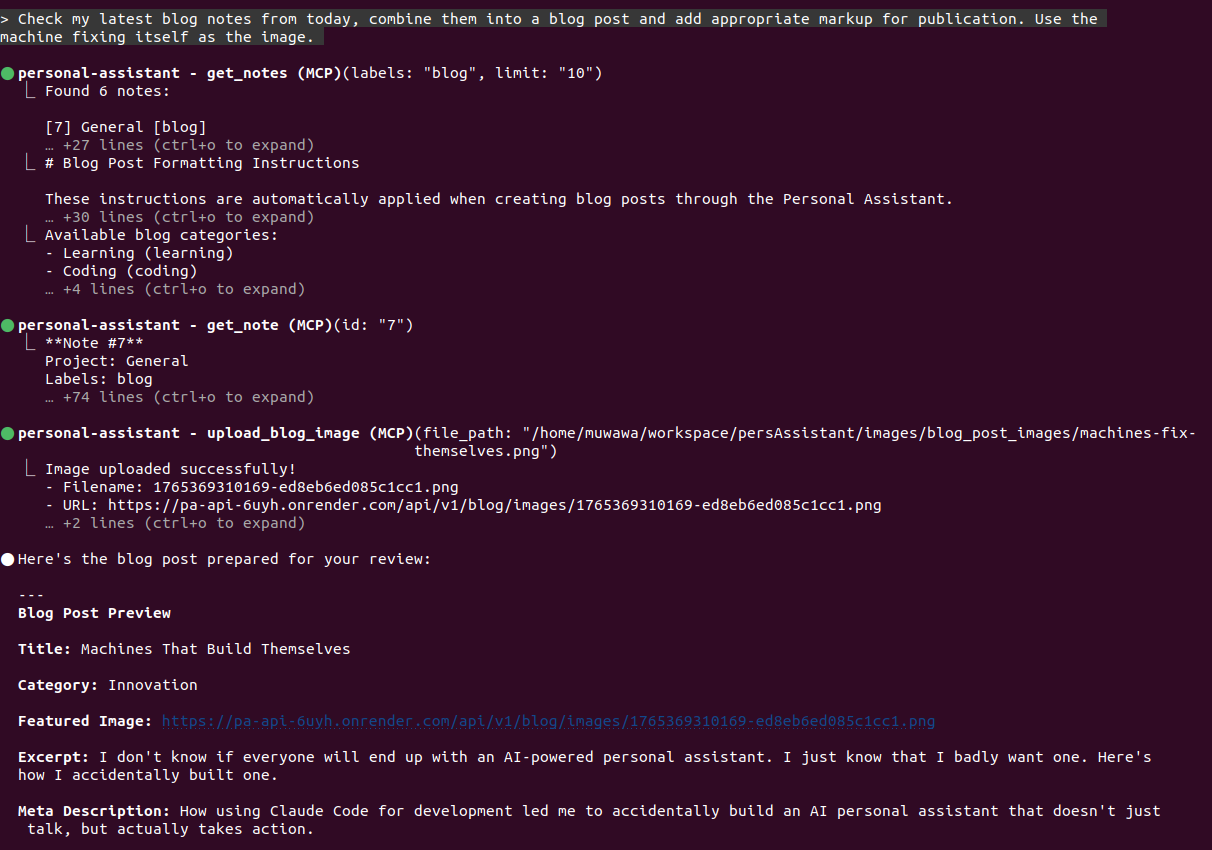

Update 17 Nov: Another great example of functionality emerging without me asking for it. I was working on a new blog post, and once I had published it, my personal assistant said it had noticed an action item corresponding to the work we had just done and asked whether I would like it to mark the item as done. Love it!

Why Most AI Agents Feel Limited

Claude Code feels "alive" because it has a degree of control over my laptop: the ability to take action. Agency. It sees all my files and modifies them, runs commands, checks state, and makes changes that are impactful and obvious to me.

My MCP-backed personal assistant expands that agency beyond my laptop. Most agents can talk. Some can read. Very few can act.

That's the MCP divide. Without the ability to act, agents feel clever but isolated. With it, they start to feel embodied.

Automation with Claude Code

The Assistant I Actually Want

A real personal assistant filters noise, organises priorities, tracks actions, schedules time, prepares information for quick decisions, handles logistics, and fetches coffee.

An AI personal assistant can already do most of this, faster, cheaper, and without friction. I want my personal assistant to tee up the most important things I need to do today in a way that makes them feel achievable. Everything else is handled, deferred, or waiting for approval.
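One simple way to "tee up" the day is to rank open actions and cut the list short. The post doesn't say how the assistant decides, so this sketch assumes a rule loosely inspired by the urgent/important (Eisenhower) matrix: sort by importance, break ties by urgency, keep the top few for today and leave the rest to be deferred. `plan_today`, its `limit` parameter and the 1 to 5 scales are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    title: str
    urgency: int     # 1 (low) to 5 (high), an assumed scale
    importance: int  # 1 (low) to 5 (high), an assumed scale


def plan_today(actions: list[Action], limit: int = 3) -> tuple[list[Action], list[Action]]:
    """Split actions into a short list for today and everything else.

    Hypothetical ranking: importance first, then urgency, highest first.
    Python's sort is stable, so equal-ranked items keep their original order.
    """
    ranked = sorted(actions, key=lambda a: (a.importance, a.urgency), reverse=True)
    return ranked[:limit], ranked[limit:]


actions = [
    Action("Reply to newsletter", urgency=5, importance=1),
    Action("Draft blog post", urgency=2, importance=5),
    Action("Renew passport", urgency=4, importance=4),
    Action("Tidy inbox", urgency=3, importance=1),
]
today, later = plan_today(actions, limit=2)
```

Putting importance ahead of urgency is a deliberate choice in this sketch: it keeps loud but trivial items from crowding out the work that matters, at the cost of occasionally deferring something time-sensitive.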

Open Build

If you're curious, I'm sharing the core of it openly here: GitHub - Personal Assistant Repository

And if your instinct after reading this is "I'm working on something similar, want to collaborate?" then I'd genuinely love to hear from you.

The most interesting versions of this future are still being built quietly, by individuals, in evenings and weekends. That's usually where the good stuff starts.