Opus 4.5 is smarter than my dog

As machines become better at tasks we once considered uniquely human (writing, reasoning, coding, conversing), an odd pattern keeps repeating. Each time a system crosses a new threshold, many people insist that it doesn't really count as intelligence. The Turing test seems almost quaint now. Of course, LLMs can pass the Turing test, but is that really a sign of intelligence?

The interesting question is no longer whether machines can behave intelligently, but why so many people resist calling that behavior intelligence at all.

The unsolved-maths test

A common objection goes something like this: "AI isn't really intelligent because it can't generate truly original ideas. It can't solve unsolved maths problems, for example."

At first glance, this sounds reasonable, until you notice the benchmark being set.

The overwhelming majority of humans, indisputably intelligent beings, cannot solve unsolved maths problems either. Most people will never prove a new theorem, invent a new branch of mathematics, or produce anything genuinely novel in that sense. Yet we don't conclude from this that humans are "not really intelligent."

Instead, we apply a different standard. Humans are allowed to be intelligent on average. Machines are required to be exceptional. For AI, intelligence is judged not by what it commonly does, but by what human geniuses can do.

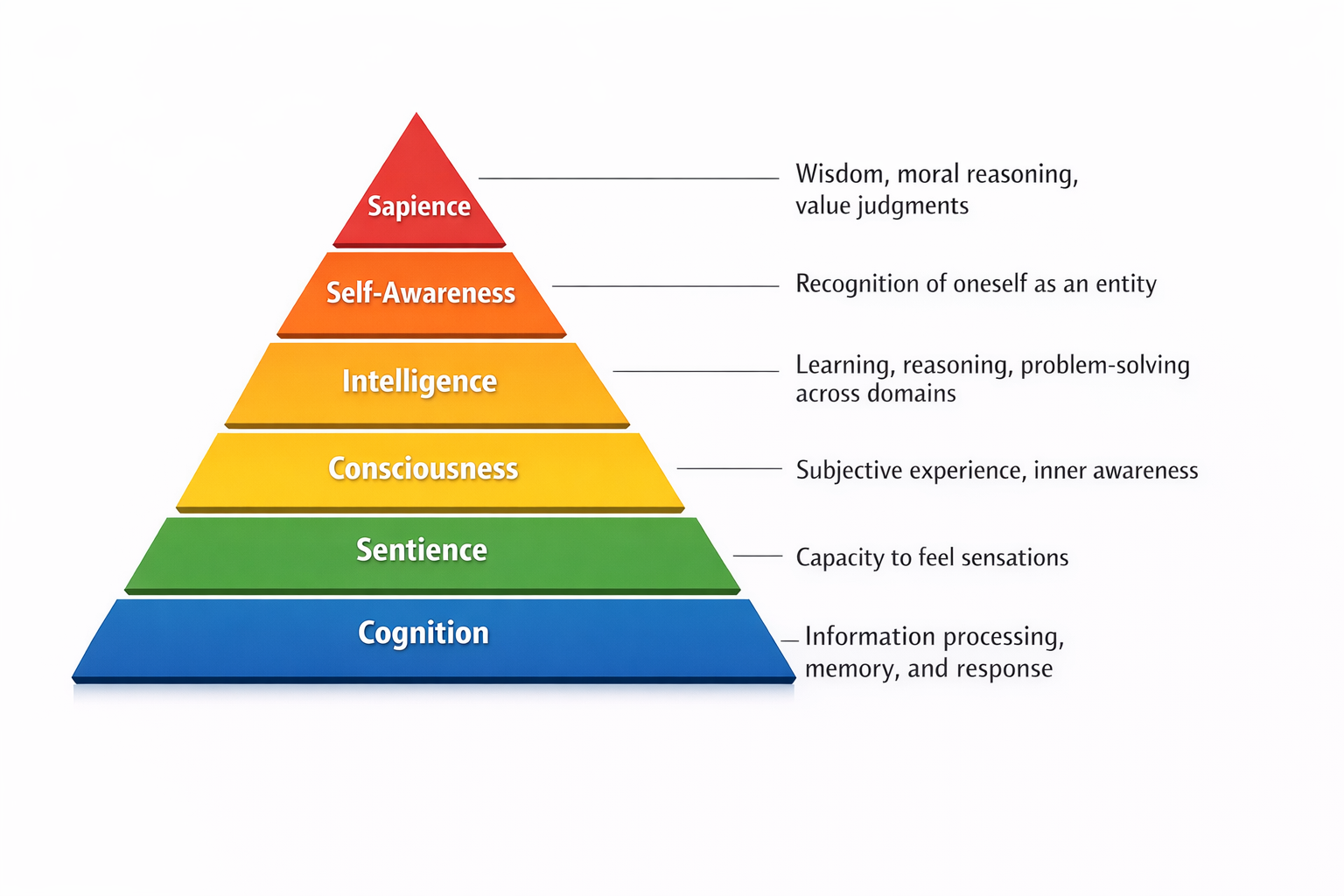

A ladder of mental traits

When we talk about "intelligence," we often conflate several distinct traits, and this is part of the confusion.

Intelligence is often treated as if it must come bundled with consciousness, selfhood, or moral depth, especially when machines are involved. LLMs are showing us that this assumption does not necessarily hold.

The double standard

I don't know about Yann LeCun's cat, but LLMs are smarter than my dog! We are remarkably generous when judging biological systems. Dogs are described as intelligent, emotional, even empathetic, on relatively thin evidence. We infer rich inner lives based on familiarity, shared biology, and evolutionary continuity.

With machines, comparable behavior is dismissed. A system can outperform humans on reasoning tasks and still be described as "just statistics" or "mere pattern matching." Identical capabilities are judged differently depending on what the thing is made of.

This isn't a scientific distinction so much as an intuitive one.

The moving goalposts

This leads to what's often called the AI effect. Each time a machine succeeds, the definition of intelligence shifts:

- Chess was once the benchmark, until computers mastered it

- Then vision and speech were benchmarks, until machines did those too

- Language and reasoning followed the same path

Intelligence becomes whatever machines can't yet do. Once they can, it is reclassified as automation, optimization, or imitation.

A deeper resistance

Is this just sloppy language? I don't think so. There's a more philosophical reason for the resistance. You know you are conscious because you experience the world directly. You do not, and cannot, know this about anyone else. For other humans, you make an inference based on similarity and social necessity.

With machines, we refuse to make that leap. Not because the logic is weaker, but because we know how the machine was made. Its origins break the spell.

This suggests that intelligence and consciousness are not just properties we detect but statuses we grant.

What this tells us

We may never resolve these questions with better benchmarks alone. The real issue is not whether machines are intelligent, but what we are willing to recognize as intelligence. We may have to wait until our own cognitive abilities are fully understood, and the mystique of human intelligence has faded, before we can become better judges of artificial intelligence.

And that, ultimately, tells us as much about human psychology and philosophy as it does about the machines themselves.

Related reading: Jeff Hawkins' On Intelligence explores how the brain works as a prediction machine. For practical guidance on working with LLMs, see my review of Co-Intelligence.